Machine Learning/Using TensorFlow on Mac


Introduction

This page explains how to install TensorFlow, the open-source software (OSS) released by Google, on a Mac (OS X) and use it.

It was originally written for TensorFlow 0.8 and has been updated for TensorFlow 1.2.

Environment

- macOS Sierra 10.12.5

- Python 3.5.1

- TensorFlow 1.2

Installing (updating) Python 3

First, update Python 3 to the latest version: download the Mac OS X 64-bit/32-bit installer from the Python download site and install it.

Installing TensorFlow

Basically, follow the official instructions:

- Installing TensorFlow on Mac OS X - TensorFlow

```
$ sudo easy_install pip
$ sudo pip3 install --upgrade pip
```

Installing Virtualenv

```
$ sudo pip3 install --upgrade virtualenv
$ virtualenv --system-site-packages -p python3 /Users/Shared/tools/tensorflow
$ source /Users/Shared/tools/tensorflow/bin/activate
(tensorflow)$ sudo pip3 install --upgrade tensorflow
(tensorflow)$ deactivate
```

In my lab, tools used by everyone are installed in a folder named tools inside the shared folder (/Users/Shared/tools/). Replace /Users/Shared/tools/tensorflow with the folder where you want to install TensorFlow, such as ~/tensorflow.

Running TensorFlow

Run the sample code published on the TensorFlow website.

- Getting Started With TensorFlow - TensorFlow

```
$ source /Users/Shared/tools/tensorflow/bin/activate
(tensorflow) $ python3
Python 3.5.1 (v3.5.1:37a07cee5969, Dec 5 2015, 21:12:44)
[GCC 4.2.1 (Apple Inc. build 5666) (dot 3)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
>>> import tensorflow as tf
>>> node1 = tf.constant(3.0, dtype=tf.float32)
>>> node2 = tf.constant(4.0)
>>> sess = tf.Session()
2017-06-20 08:28:06.121472: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-20 08:28:06.121492: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.
2017-06-20 08:28:06.121498: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-20 08:28:06.121502: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.
>>> print(sess.run([node1, node2]))
[3.0, 4.0]
>>> node3 = tf.add(node1, node2)
>>> print(sess.run(node3))
7.0
>>> quit()
(tensorflow) $ deactivate
```

The warnings after tf.Session() are messages saying that computations could run faster if TensorFlow used the CPU's SSE4.2, AVX, AVX2, and FMA instructions.

Trying classification with TensorFlow

The first TensorFlow tutorial is handwritten digit recognition with MNIST, but here we try classification (category analysis) with the Iris dataset instead.

Preparing the data

The Iris dataset consists of four explanatory variables (sepal length, sepal width, petal length, petal width) and a category (setosa, versicolor, virginica).

Download the file iris.data from the UCI Machine Learning Repository. Its contents look like this:

```
$ head -5 iris.data
5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
4.7,3.2,1.3,0.2,Iris-setosa
4.6,3.1,1.5,0.2,Iris-setosa
5.0,3.6,1.4,0.2,Iris-setosa
```

Since this is a three-class problem, convert each category label into a three-dimensional 0/1 vector: setosa becomes 1,0,0, versicolor 0,1,0, and virginica 0,0,1.
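As a minimal sketch of this encoding (the names `CLASSES`, `one_hot`, and `decode` are hypothetical and not part of the article's script), here is the label-to-vector mapping together with the reverse direction. Decoding by argmax is what the script's accuracy computation relies on when it compares `tf.argmax(y, 1)` with `tf.argmax(y_, 1)`.

```python
import numpy as np

# Class order used in this article: setosa, versicolor, virginica
CLASSES = ["Iris-setosa", "Iris-versicolor", "Iris-virginica"]


def one_hot(label):
    """Return the 3-dimensional 0/1 vector for an iris.data label string."""
    vec = [0, 0, 0]
    vec[CLASSES.index(label)] = 1
    return vec


def decode(vec):
    """Recover the class name from a one-hot (or softmax) vector via argmax."""
    return CLASSES[int(np.argmax(vec))]


print(one_hot("Iris-versicolor"))   # [0, 1, 0]
print(decode([0.1, 0.2, 0.7]))      # Iris-virginica
```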

The Iris dataset contains 150 samples, 50 of each class in that order, so convert it as follows:

```
$ head -50 iris.data | awk -F, '{printf("%s,%s,%s,%s,1,0,0\n",$1,$2,$3,$4);}' > iris.csv
$ head -100 iris.data | tail -50 | awk -F, '{printf("%s,%s,%s,%s,0,1,0\n",$1,$2,$3,$4);}' >> iris.csv
$ head -150 iris.data | tail -50 | awk -F, '{printf("%s,%s,%s,%s,0,0,1\n",$1,$2,$3,$4);}' >> iris.csv
```
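The awk commands depend on the rows being ordered 50 per class. As an alternative sketch that reads the label column instead, assuming iris.data sits in the current directory (the function name `convert_iris` is hypothetical), the same iris.csv can be produced with plain Python:

```python
import os

# Label -> one-hot suffix, matching the encoding used in this article
ONE_HOT = {
    "Iris-setosa": "1,0,0",
    "Iris-versicolor": "0,1,0",
    "Iris-virginica": "0,0,1",
}


def convert_iris(lines):
    """Convert iris.data lines into iris.csv lines (4 features + one-hot label).

    Blank lines (iris.data ends with one) are skipped.
    """
    out = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        *features, label = line.split(",")
        out.append(",".join(features) + "," + ONE_HOT[label])
    return out


# Only touch the file system if iris.data is actually present
if os.path.exists("iris.data"):
    with open("iris.data") as src, open("iris.csv", "w") as dst:
        for row in convert_iris(src):
            dst.write(row + "\n")
```

Because it keys on the label string, this version would still work if the rows were reordered; the awk version is shorter when the order is known.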

This completes the data preparation. Place this file in the data folder.
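The classification script in the next section loads this file with `np.genfromtxt`, shuffles the rows with `np.random.shuffle`, and splits them with `np.vsplit`. A small sketch of that step, using a six-row in-memory stand-in for data/iris.csv (an assumption for illustration) and a split point of 4 instead of 120:

```python
import io

import numpy as np

# A tiny stand-in for data/iris.csv (assumption: 6 rows instead of 150)
csv_text = """5.1,3.5,1.4,0.2,1,0,0
4.9,3.0,1.4,0.2,1,0,0
7.0,3.2,4.7,1.4,0,1,0
6.4,3.2,4.5,1.5,0,1,0
6.3,3.3,6.0,2.5,0,0,1
5.8,2.7,5.1,1.9,0,0,1
"""

data = np.genfromtxt(io.StringIO(csv_text), delimiter=",")
np.random.shuffle(data)  # shuffles whole rows in place

samples = 4  # plays the role of FLAGS.samples (120 in the article)
# np.vsplit(a, [n]) returns [a[:n], a[n:]]
x_train, x_test = np.vsplit(data[:, 0:4].astype(np.float32), [samples])
y_train, y_test = np.vsplit(data[:, 4:7].astype(np.float32), [samples])

print(x_train.shape, x_test.shape)  # (4, 4) (2, 4)
print(y_train.shape, y_test.shape)  # (4, 3) (2, 3)
```

Shuffling the whole array once, before slicing out the feature and label columns, keeps each feature row paired with its label.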

Source code for classification

The source code, which reuses the MNIST (handwritten digits) sample from the TensorFlow tutorials almost unchanged, looks like this:

```python
# Copyright 2016-2017 Tohgoroh Matsui All Rights Reserved.
#
# Changes:
# 2017-06-19 Changed for TensorFlow 1.2
#
#
# This includes software developed by Google, Inc.
# licensed under the Apache License, Version 2.0 (the 'License');
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an 'AS IS' BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

import tensorflow as tf
import numpy as np

# Parameters
flags = tf.app.flags
FLAGS = flags.FLAGS
flags.DEFINE_integer('samples', 120, 'Number of training samples.')
flags.DEFINE_integer('max_steps', 1001, 'Number of steps to run trainer.')
flags.DEFINE_float('learning_rate', 0.001, 'Initial learning rate.')
flags.DEFINE_float('dropout', 0.9, 'Keep probability for training dropout.')
flags.DEFINE_string('data_dir', 'data', 'Directory for storing data.')
flags.DEFINE_string('summaries_dir', 'log', 'Summaries directory.')


def train():
    # Load the CSV file
    data = np.genfromtxt(FLAGS.data_dir + '/iris.csv', delimiter=",")
    # Shuffle the rows
    np.random.shuffle(data)
    # Split into training data (train) and test data (test)
    x_train, x_test = np.vsplit(data[:, 0:4].astype(np.float32), [FLAGS.samples])
    y_train, y_test = np.vsplit(data[:, 4:7].astype(np.float32), [FLAGS.samples])

    # Session
    sess = tf.InteractiveSession()

    # Input
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, shape=[None, 4])
    # Output (one-hot labels)
    with tf.name_scope('output'):
        y_ = tf.placeholder(tf.float32, shape=[None, 3])
    # Dropout keep probability
    with tf.name_scope('dropout'):
        keep_prob = tf.placeholder(tf.float32)
        tf.summary.scalar('dropout_keep_probability', keep_prob)

    # Weights (coefficients)
    def weight_variable(shape):
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)

    # Biases (constant terms)
    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)

    # Summaries for TensorBoard
    def variable_summaries(var, name):
        with tf.name_scope('summaries'):
            mean = tf.reduce_mean(var)
            tf.summary.scalar('mean/' + name, mean)
            with tf.name_scope('stddev'):
                # Standard deviation: square root of the mean squared deviation
                stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
            tf.summary.scalar('stddev/' + name, stddev)
            tf.summary.scalar('max/' + name, tf.reduce_max(var))
            tf.summary.scalar('min/' + name, tf.reduce_min(var))
            tf.summary.histogram(name, var)

    # One neural-network layer: act(x W + b)
    def nn_layer(input_tensor, input_dim, output_dim, layer_name, act=tf.nn.relu):
        with tf.name_scope(layer_name):
            with tf.name_scope('weights'):
                weights = weight_variable([input_dim, output_dim])
                variable_summaries(weights, layer_name + '/weights')
            with tf.name_scope('biases'):
                biases = bias_variable([output_dim])
                variable_summaries(biases, layer_name + '/biases')
            with tf.name_scope('Wx_plus_b'):
                preactivate = tf.matmul(input_tensor, weights) + biases
                tf.summary.histogram(layer_name + '/pre_activations', preactivate)
            activations = act(preactivate, name='activation')
            tf.summary.histogram(layer_name + '/activations', activations)
            return activations

    # Input layer (4 -> 64)
    hidden1 = nn_layer(x, 4, 64, 'layer1')
    dropped1 = tf.nn.dropout(hidden1, keep_prob)
    # Hidden layer (64 -> 64)
    hidden2 = nn_layer(dropped1, 64, 64, 'layer2')
    dropped2 = tf.nn.dropout(hidden2, keep_prob)
    # Output layer (64 -> 3, softmax)
    y = nn_layer(dropped2, 64, 3, 'layer3', act=tf.nn.softmax)

    # Cross-entropy
    with tf.name_scope('cross_entropy'):
        # diff = y_ * tf.log(y)
        # with tf.name_scope('total'):
        #     cross_entropy = -tf.reduce_mean(diff)
        # Clip y before taking the log so that log(0) cannot produce NaN
        cross_entropy = -tf.reduce_sum(y_ * tf.log(tf.clip_by_value(y, 1e-10, 1.0)))
        tf.summary.scalar('cross_entropy', cross_entropy)

    # Training
    with tf.name_scope('train'):
        train_step = tf.train.AdamOptimizer(FLAGS.learning_rate).minimize(cross_entropy)

    # Accuracy
    with tf.name_scope('accuracy'):
        with tf.name_scope('correct_prediction'):
            correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        with tf.name_scope('accuracy'):
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('accuracy', accuracy)

    # For TensorBoard
    merged = tf.summary.merge_all()
    train_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/train', sess.graph)
    test_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/test')
    tf.global_variables_initializer().run()

    # Switch between training/test data and the dropout keep probability
    def feed_dict(train):
        if train:
            xs, ys = x_train, y_train
            k = FLAGS.dropout
        else:
            xs, ys = x_test, y_test
            k = 1.0
        return {x: xs, y_: ys, keep_prob: k}

    # Training loop
    for i in range(FLAGS.max_steps):
        # Train
        sess.run(train_step, feed_dict=feed_dict(True))
        # Evaluate on the training data
        summary, acc, cp = sess.run([merged, accuracy, cross_entropy], feed_dict=feed_dict(True))
        train_writer.add_summary(summary, i)
        # Evaluate on the test data
        summary, acc, cp = sess.run([merged, accuracy, cross_entropy], feed_dict=feed_dict(False))
        test_writer.add_summary(summary, i)
        # Print at steps 0-10, then every 10 steps up to 100, then every 100 steps
        if i == 0 or i % np.power(10, np.floor(np.log10(i))) == 0:
            print('Accuracy and Cross-Entropy at step %s: %s, %s' % (i, acc, cp))
    train_writer.close()
    test_writer.close()


def main(_):
    if tf.gfile.Exists(FLAGS.summaries_dir):
        tf.gfile.DeleteRecursively(FLAGS.summaries_dir)
    tf.gfile.MakeDirs(FLAGS.summaries_dir)
    train()


if __name__ == '__main__':
    tf.app.run()
```

It differs from mnist_with_summaries.py in the following ways:

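- Read the data from iris.csv

- Shuffle the data and use 120 samples as training data and the remaining 30 as test data

- 4-dimensional input and 3-dimensional output

- Add a hidden layer (with the ReLU activation function)

- The input layer maps 4 inputs to 64 units, the hidden layer 64 to 64 units, and the output layer 64 to 3 units (weight matrices of 4x64, 64x64, and 64x3)

- Change the definition of cross-entropy (with the original definition, log(0) can make the loss NaN)

- Instead of training on 9 out of every 10 steps and testing on the 10th, train and test at every step

- Print the accuracy and cross-entropy for the test data to standard output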
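The NaN mentioned in the cross-entropy bullet comes from log(0): once a softmax output is exactly 0, a term of y_ * log(y) becomes 0 * (-inf) = NaN, and the true class getting probability 0 gives -inf. A NumPy sketch comparing the naive formula with the clipped one used in the script (the function names `naive_ce` and `clipped_ce` are hypothetical):

```python
import numpy as np


def naive_ce(y_true, y_pred):
    # -sum(y_ * log(y)); log(0) = -inf, and 0 * -inf = nan
    with np.errstate(divide="ignore", invalid="ignore"):
        return -np.sum(y_true * np.log(y_pred))


def clipped_ce(y_true, y_pred):
    # As in the script: clip y into [1e-10, 1.0] before taking the log
    return -np.sum(y_true * np.log(np.clip(y_pred, 1e-10, 1.0)))


y_true = np.array([[1.0, 0.0, 0.0]])     # one-hot label: setosa
confident = np.array([[1.0, 0.0, 0.0]])  # saturated, correct prediction
wrong = np.array([[0.0, 1.0, 0.0]])      # saturated, wrong prediction

print(naive_ce(y_true, confident), clipped_ce(y_true, confident))
print(naive_ce(y_true, wrong), clipped_ce(y_true, wrong))
```

With clipping, a wrong saturated prediction costs -log(1e-10) (about 23.03) instead of NaN. TensorFlow also provides `tf.nn.softmax_cross_entropy_with_logits`, which works from the pre-softmax values and avoids log(0) without clipping; using it would mean passing the output layer's preactivation rather than its softmax output.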

Running the script

```
(tensorflow) $ python3 tfc_iris.py
2017-06-19 18:32:23.449363: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 18:32:23.449387: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 18:32:23.449393: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 18:32:23.449398: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.
Accuracy and Cross-Entropy at step 0: 0.366667, 31.8714
Accuracy and Cross-Entropy at step 1: 0.3, 31.5792
Accuracy and Cross-Entropy at step 2: 0.3, 31.289
Accuracy and Cross-Entropy at step 3: 0.3, 31.0007
Accuracy and Cross-Entropy at step 4: 0.3, 30.7225
Accuracy and Cross-Entropy at step 5: 0.333333, 30.4467
Accuracy and Cross-Entropy at step 6: 0.4, 30.1522
Accuracy and Cross-Entropy at step 7: 0.5, 29.8428
Accuracy and Cross-Entropy at step 8: 0.566667, 29.5071
Accuracy and Cross-Entropy at step 9: 0.633333, 29.1437
Accuracy and Cross-Entropy at step 10: 0.666667, 28.7539
Accuracy and Cross-Entropy at step 20: 0.666667, 24.4503
Accuracy and Cross-Entropy at step 30: 0.666667, 19.725
Accuracy and Cross-Entropy at step 40: 0.666667, 15.7528
Accuracy and Cross-Entropy at step 50: 0.866667, 13.1522
Accuracy and Cross-Entropy at step 60: 0.9, 11.3919
Accuracy and Cross-Entropy at step 70: 0.933333, 9.89667
Accuracy and Cross-Entropy at step 80: 0.933333, 8.50913
Accuracy and Cross-Entropy at step 90: 0.933333, 7.18226
Accuracy and Cross-Entropy at step 100: 0.933333, 6.18636
Accuracy and Cross-Entropy at step 200: 0.933333, 4.82435
Accuracy and Cross-Entropy at step 300: 0.933333, 4.7089
Accuracy and Cross-Entropy at step 400: 0.933333, 5.20262
Accuracy and Cross-Entropy at step 500: 0.933333, 6.28935
Accuracy and Cross-Entropy at step 600: 0.933333, 6.46973
Accuracy and Cross-Entropy at step 700: 0.933333, 6.57728
Accuracy and Cross-Entropy at step 800: 0.933333, 7.21051
Accuracy and Cross-Entropy at step 900: 0.933333, 7.32343
Accuracy and Cross-Entropy at step 1000: 0.933333, 7.07634
```
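The log-spaced steps in this output (0 to 10, then every 10 up to 100, then every 100) come from the condition `i == 0 or i % np.power(10, np.floor(np.log10(i))) == 0` in the training loop. A sketch that reproduces the same condition with integer arithmetic (the number of digits of `i`, minus one, as the exponent) instead of floating-point logarithms; `printed_steps` is a hypothetical helper:

```python
def printed_steps(max_steps):
    """Return the steps at which the training loop prints.

    Equivalent to: i == 0 or i % 10**floor(log10(i)) == 0.
    """
    steps = []
    for i in range(max_steps):
        # 10**floor(log10(i)) == 10**(number of decimal digits of i - 1)
        if i == 0 or i % 10 ** (len(str(i)) - 1) == 0:
            steps.append(i)
    return steps


steps = printed_steps(1001)  # max_steps = 1001 as in the script
print(len(steps))   # 29
print(steps[:12])   # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 20]
```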

The warnings printed first tell you that computations could be faster if TensorFlow used these CPU instructions. To use them, you need to build TensorFlow from source and install it.

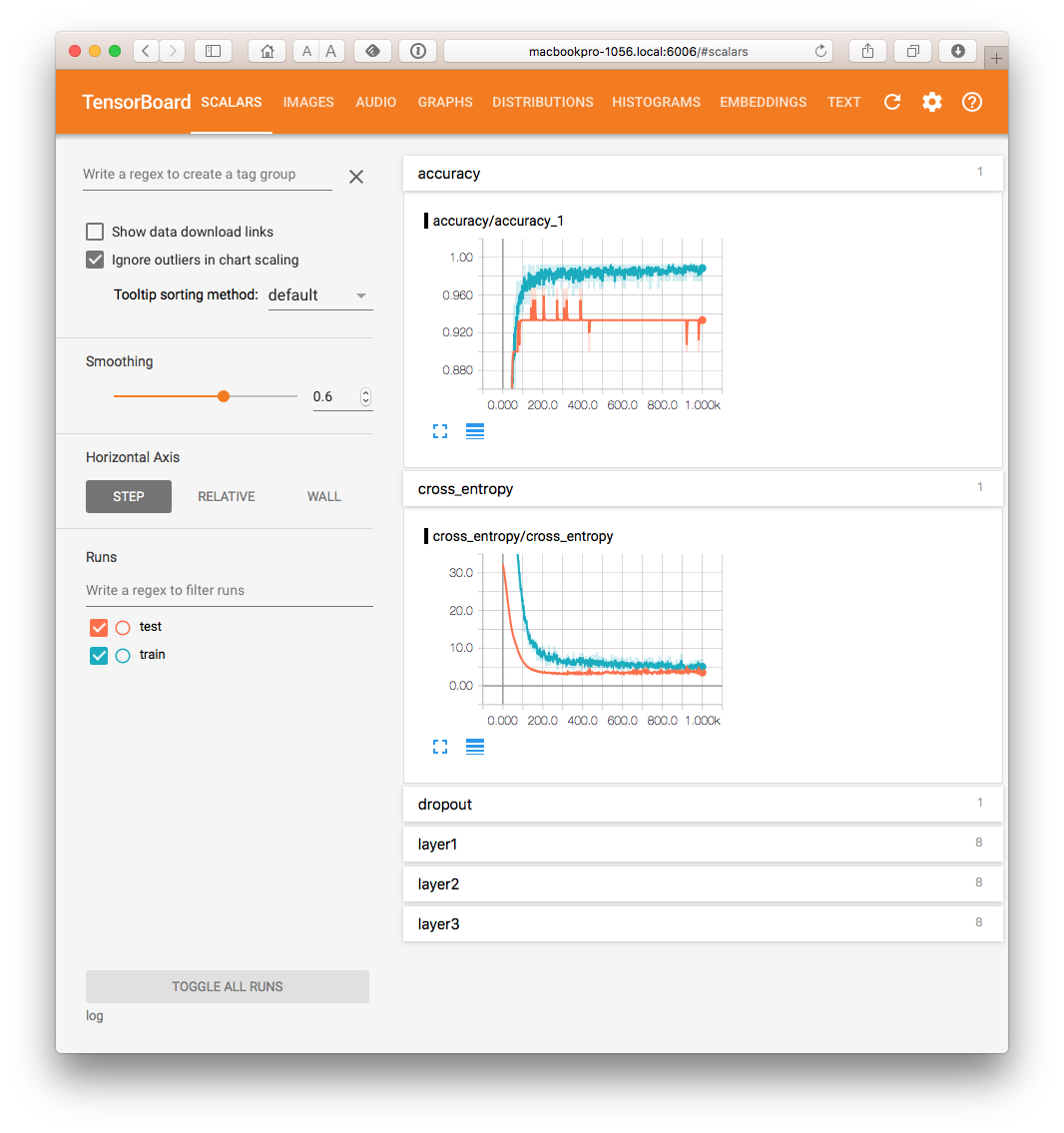

Checking the logs with TensorBoard

Check the logs with TensorBoard.

Starting TensorBoard as follows launches an HTTP server on port 6006.

```
(tensorflow) $ tensorboard --logdir=log
```

Then open http://localhost:6006 in a web browser.

Trying regression with TensorFlow

Next, let's try regression with the Housing dataset.

Preparing the data

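In the Housing dataset, the task is to predict house prices in the Boston area from 13 explanatory variables, such as the per-town crime rate and the proportion of residential land.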

Download housing.data from the UCI Machine Learning Repository. Its contents look like this:

```
$ head -5 housing.data
 0.00632 18.00 2.310 0 0.5380 6.5750 65.20 4.0900 1 296.0 15.30 396.90 4.98 24.00
 0.02731 0.00 7.070 0 0.4690 6.4210 78.90 4.9671 2 242.0 17.80 396.90 9.14 21.60
 0.02729 0.00 7.070 0 0.4690 7.1850 61.10 4.9671 2 242.0 17.80 392.83 4.03 34.70
 0.03237 0.00 2.180 0 0.4580 6.9980 45.80 6.0622 3 222.0 18.70 394.63 2.94 33.40
 0.06905 0.00 2.180 0 0.4580 7.1470 54.20 6.0622 3 222.0 18.70 396.90 5.33 36.20
```

Convert this into a comma-separated CSV file by removing the leading space and replacing each run of spaces with a comma:

```
$ sed -E -e 's/^ +//' -e 's/ +/,/g' housing.data > housing.csv
```
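The same conversion can be sketched in Python without relying on a particular sed dialect: `str.split()` with no argument ignores leading and trailing whitespace and treats any run of spaces as one separator. The helper name `whitespace_to_csv` is hypothetical, and the file is only written if housing.data exists in the current directory:

```python
import os


def whitespace_to_csv(lines):
    """Convert whitespace-separated housing.data lines to CSV lines.

    str.split() with no argument drops leading/trailing whitespace and
    treats any run of spaces as a single separator; blank lines are skipped.
    """
    return [",".join(line.split()) for line in lines if line.strip()]


if os.path.exists("housing.data"):
    with open("housing.data") as src, open("housing.csv", "w") as dst:
        for row in whitespace_to_csv(src):
            dst.write(row + "\n")
```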

This completes the data preparation. Place this file in the data folder.

Source code for regression

The regression source code, created by modifying parts of the classification code, looks like this:

```python
# Copyright 2016-2017 Tohgoroh Matsui All Rights Reserved.
#
# Changes:
# 2017-06-19 Changed for TensorFlow 1.2
#
#
# This includes software developed by Google, Inc.
# licensed under the Apache License, Version 2.0 (the 'License');
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an 'AS IS' BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

import tensorflow as tf
import numpy as np

# Parameters
flags = tf.app.flags
FLAGS = flags.FLAGS
flags.DEFINE_integer('samples', 400, 'Number of training samples.')
flags.DEFINE_integer('max_steps', 1001, 'Number of steps to run trainer.')
flags.DEFINE_float('learning_rate', 0.001, 'Initial learning rate.')
flags.DEFINE_float('dropout', 0.9, 'Keep probability for training dropout.')
flags.DEFINE_string('data_dir', 'data', 'Directory for storing data.')
flags.DEFINE_string('summaries_dir', 'log', 'Summaries directory.')


def train():
    # Load the CSV file
    data = np.genfromtxt(FLAGS.data_dir + '/housing.csv', delimiter=",")
    # Shuffle the rows
    np.random.shuffle(data)
    # Split into training data (train) and test data (test)
    x_train, x_test = np.vsplit(data[:, 0:13].astype(np.float32), [FLAGS.samples])
    y_train, y_test = np.vsplit(data[:, 13:14].astype(np.float32), [FLAGS.samples])

    # Session
    sess = tf.InteractiveSession()

    # Input (13 explanatory variables)
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, shape=[None, 13])
    # Output (house price)
    with tf.name_scope('output'):
        y_ = tf.placeholder(tf.float32, shape=[None, 1])
    # Dropout keep probability
    with tf.name_scope('dropout'):
        keep_prob = tf.placeholder(tf.float32)
        tf.summary.scalar('dropout_keep_probability', keep_prob)

    # Weights (coefficients)
    def weight_variable(shape):
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial)

    # Biases (constant terms)
    def bias_variable(shape):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial)

    # Summaries for TensorBoard
    def variable_summaries(var, name):
        with tf.name_scope('summaries'):
            mean = tf.reduce_mean(var)
            tf.summary.scalar('mean/' + name, mean)
            with tf.name_scope('stddev'):
                # Standard deviation: square root of the mean squared deviation
                stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
            tf.summary.scalar('stddev/' + name, stddev)
            tf.summary.scalar('max/' + name, tf.reduce_max(var))
            tf.summary.scalar('min/' + name, tf.reduce_min(var))
            tf.summary.histogram(name, var)

    # One neural-network layer: ReLU(x W + b), or x W + b when relu=False
    def nn_layer(input_tensor, input_dim, output_dim, layer_name, relu=True):
        with tf.name_scope(layer_name):
            with tf.name_scope('weights'):
                weights = weight_variable([input_dim, output_dim])
                variable_summaries(weights, layer_name + '/weights')
            with tf.name_scope('biases'):
                biases = bias_variable([output_dim])
                variable_summaries(biases, layer_name + '/biases')
            with tf.name_scope('Wx_plus_b'):
                preactivate = tf.matmul(input_tensor, weights) + biases
                tf.summary.histogram(layer_name + '/pre_activations', preactivate)
            if relu:
                activations = tf.nn.relu(preactivate, name='activation')
            else:
                activations = preactivate
            tf.summary.histogram(layer_name + '/activations', activations)
            return activations

    # Input layer (13 -> 64)
    hidden1 = nn_layer(x, 13, 64, 'layer1')
    dropped1 = tf.nn.dropout(hidden1, keep_prob)
    # Hidden layer (64 -> 64)
    hidden2 = nn_layer(dropped1, 64, 64, 'layer2')
    dropped2 = tf.nn.dropout(hidden2, keep_prob)
    # Output layer (64 -> 1, no activation function)
    y = nn_layer(dropped2, 64, 1, 'layer3', relu=False)

    # Loss: mean squared error
    with tf.name_scope('loss'):
        loss = tf.reduce_mean(tf.square(y_ - y))
        tf.summary.scalar('loss', loss)

    # Training
    with tf.name_scope('train'):
        train_step = tf.train.AdagradOptimizer(FLAGS.learning_rate).minimize(loss)

    # For TensorBoard
    merged = tf.summary.merge_all()
    train_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/train', sess.graph)
    test_writer = tf.summary.FileWriter(FLAGS.summaries_dir + '/test')
    tf.global_variables_initializer().run()

    # Switch between training/test data and the dropout keep probability
    def feed_dict(train):
        if train:
            xs, ys = x_train, y_train
            k = FLAGS.dropout
        else:
            xs, ys = x_test, y_test
            k = 1.0
        return {x: xs, y_: ys, keep_prob: k}

    # Training loop
    for i in range(FLAGS.max_steps):
        # Train
        sess.run(train_step, feed_dict=feed_dict(True))
        # Evaluate on the training data
        summary, train_loss = sess.run([merged, loss], feed_dict=feed_dict(True))
        train_writer.add_summary(summary, i)
        # Evaluate on the test data (est holds the predicted prices)
        summary, test_loss, est = sess.run([merged, loss, y], feed_dict=feed_dict(False))
        test_writer.add_summary(summary, i)
        # Print at steps 0-10, then every 10 steps up to 100, then every 100 steps
        if i == 0 or i % np.power(10, np.floor(np.log10(i))) == 0:
            print('Train loss and test loss at step %s: %s, %s' % (i, train_loss, test_loss))
    train_writer.close()
    test_writer.close()


def main(_):
    if tf.gfile.Exists(FLAGS.summaries_dir):
        tf.gfile.DeleteRecursively(FLAGS.summaries_dir)
    tf.gfile.MakeDirs(FLAGS.summaries_dir)
    train()


if __name__ == '__main__':
    tf.app.run()
```

The changes from the classification source code are as follows:

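- Read the data from housing.csv

- Use 400 samples as training data and the rest (106) as test data

- 13 input units and 1 output unit

- No activation function in the output layer (the linear sum is output as-is)

- The objective function is the mean squared error

- The optimization algorithm is AdagradOptimizer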
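The last three points (linear output, mean squared error, Adagrad) can be illustrated without TensorFlow. The following NumPy sketch fits a linear model on synthetic data (an assumption; this is not the Housing data) by minimizing the mean squared error with the Adagrad update, which divides the learning rate by the square root of each parameter's accumulated squared gradients. The accumulators start at 0.1, the default `initial_accumulator_value` of `tf.train.AdagradOptimizer`; the learning rate 0.5 is chosen only for this toy problem.

```python
import numpy as np

rng = np.random.RandomState(0)

# Synthetic data (assumption for illustration): y = 3*x1 - 2*x2 + 1 + noise
X = rng.randn(200, 2)
y = X @ np.array([3.0, -2.0]) + 1.0 + 0.1 * rng.randn(200)


def mse(y_true, y_pred):
    # Same quantity as tf.reduce_mean(tf.square(y_ - y))
    return np.mean((y_true - y_pred) ** 2)


w = np.zeros(2)
b = 0.0
lr = 0.5
acc_w = np.full(2, 0.1)  # Adagrad accumulators (sum of squared gradients)
acc_b = 0.1

initial_loss = mse(y, X @ w + b)
for step in range(500):
    pred = X @ w + b               # linear output, no activation function
    err = pred - y
    grad_w = 2.0 * X.T @ err / len(y)
    grad_b = 2.0 * err.mean()
    acc_w += grad_w ** 2
    acc_b += grad_b ** 2
    # Per-parameter step: lr / sqrt(accumulated squared gradients)
    w -= lr * grad_w / np.sqrt(acc_w)
    b -= lr * grad_b / np.sqrt(acc_b)

final_loss = mse(y, X @ w + b)
print(initial_loss, final_loss)
print(w, b)
```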

Running the script

```
(tensorflow) $ python3 tfr_housing.py
2017-06-19 19:18:56.446456: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 19:18:56.446483: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 19:18:56.446489: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.
2017-06-19 19:18:56.446494: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.
Train loss and test loss at step 0: 224.93, 130.847
Train loss and test loss at step 1: 183.852, 85.3385
Train loss and test loss at step 2: 168.604, 68.0674
Train loss and test loss at step 3: 148.723, 60.0659
Train loss and test loss at step 4: 133.088, 56.0457
Train loss and test loss at step 5: 136.931, 53.1182
Train loss and test loss at step 6: 130.554, 51.7777
Train loss and test loss at step 7: 133.33, 50.4604
Train loss and test loss at step 8: 124.398, 49.5372
Train loss and test loss at step 9: 153.345, 48.7853
Train loss and test loss at step 10: 126.658, 48.1853
Train loss and test loss at step 20: 131.693, 44.5592
Train loss and test loss at step 30: 115.671, 43.4062
Train loss and test loss at step 40: 112.891, 43.2623
Train loss and test loss at step 50: 113.978, 43.1277
Train loss and test loss at step 60: 114.35, 43.1381
Train loss and test loss at step 70: 96.5845, 43.1183
Train loss and test loss at step 80: 102.218, 43.1819
Train loss and test loss at step 90: 105.793, 43.2823
Train loss and test loss at step 100: 105.103, 43.2404
Train loss and test loss at step 200: 96.8645, 42.1962
Train loss and test loss at step 300: 81.3204, 40.8889
Train loss and test loss at step 400: 75.9416, 40.0916
Train loss and test loss at step 500: 83.1751, 39.7313
Train loss and test loss at step 600: 77.3653, 39.0446
Train loss and test loss at step 700: 71.6672, 38.6893
Train loss and test loss at step 800: 74.5351, 38.3223
Train loss and test loss at step 900: 76.4663, 37.7926
Train loss and test loss at step 1000: 66.521, 37.3258
```

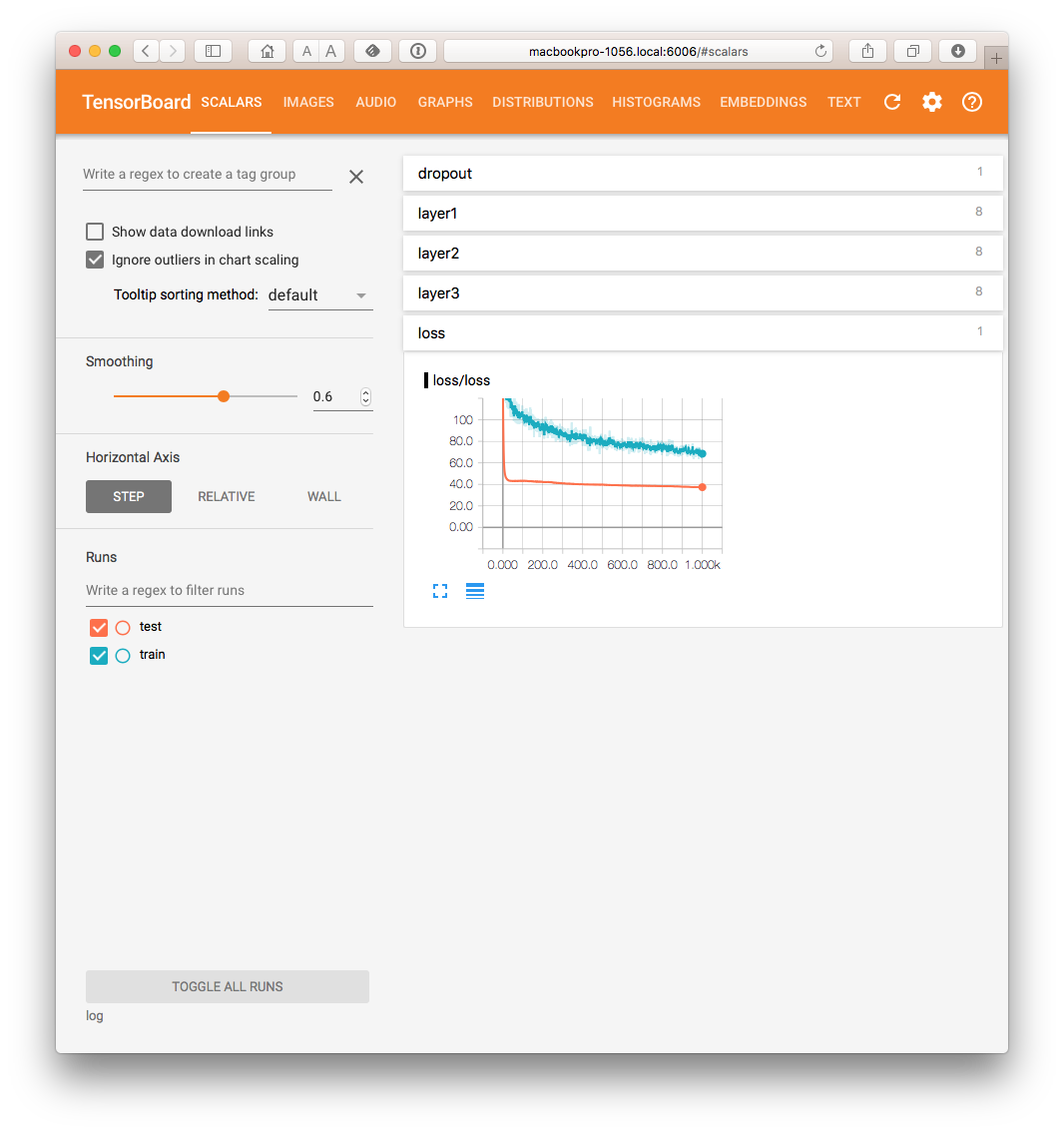

Checking the logs with TensorBoard

Same as for classification:

```
(tensorflow) $ tensorboard --logdir=log
```
